Deploying a DeepSeek model on a phone (1.5B)

Summary: deploy DeepSeek 1.5B (DeepSeek-R1-Distill-Qwen-1.5B) on a phone with llama.cpp from the ggml project, running the model on the CPU.

Experiment: inference time on the phone

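The horizontal axis is prompt length and the vertical axis is response time.

Method: take a set of prompts and feed them to the model one at a time. For each one, time the run from when the model starts generating its reply until it finishes. Record the times in an Excel sheet, then draw a line chart with Python or with Excel's built-in charts.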
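The timing step can be sketched in Python as follows, assuming llama-cli runs non-interactively with a single prompt. `-m`, `-p` (prompt) and `-n` (maximum number of tokens to generate) are standard llama-cli options. `-no-cnv` (turn off the chat mode that `-cnv` enables) exists in recent llama.cpp builds, but treat it as an assumption and check `./build/bin/llama-cli --help` on your own build. The helper names `build_cli_cmd` and `time_command` are hypothetical.

```python
import subprocess
import time
from typing import List


def build_cli_cmd(model_path: str, prompt: str, n_predict: int = 256) -> List[str]:
    """Build a one-shot llama-cli command line (flags: see the note above)."""
    return [
        "./build/bin/llama-cli",
        "-m", model_path,
        "-p", prompt,
        "-n", str(n_predict),  # cap generated tokens so runs stay comparable
        "-no-cnv",             # assumption: disables the interactive chat mode
    ]


def time_command(cmd: List[str]) -> float:
    """Run cmd to completion and return elapsed wall-clock seconds.

    The measured time includes process start-up and model loading.
    """
    start = time.perf_counter()
    subprocess.run(cmd, check=True,
                   stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start
```

For example, `time_command(build_cli_cmd("DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_M.gguf", "你是谁?"))` returns seconds for one run. Model loading is part of the wall-clock number. If only generation time matters, the timing summary that llama-cli prints on exit (on stderr, discarded here) is a more precise source.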

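Measure both the unquantized model and the quantized ones. The unquantized model gets one line, and each quantization scheme gets a line of its own.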
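Each scheme becomes its own line, so a convenient layout for an Excel line chart is one row per prompt length and one column per scheme. Below is a minimal standard-library sketch. The `results` structure and the function name `write_results_csv` are hypothetical.

```python
import csv
from typing import Dict, List, Tuple

# results[scheme] = list of (prompt_length, seconds) pairs
Results = Dict[str, List[Tuple[int, float]]]


def write_results_csv(results: Results, path: str) -> None:
    """Write one row per prompt length and one column per quantization scheme."""
    schemes = list(results)
    lengths = sorted({length for pairs in results.values() for length, _ in pairs})
    lookup = {(s, length): sec for s, pairs in results.items() for length, sec in pairs}
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["prompt_length"] + schemes)
        for length in lengths:
            # leave the cell blank if a scheme was not measured at this length
            writer.writerow([length] + [lookup.get((s, length), "") for s in schemes])
```

Open the CSV in Excel, select all columns and insert a line chart. The first column becomes the x-axis and there is one line per scheme.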

Quantization schemes tested:

No quantization (F16)

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf -cnv

Q2_K

./build/bin/llama-quantize DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q2_K.gguf Q2_K

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q2_K.gguf -cnv

Q4_K_S

./build/bin/llama-quantize DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_S.gguf Q4_K_S

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_S.gguf -cnv

Q4_K_M (this file is produced in step 8 of the deployment process below)

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_M.gguf -cnv

Q5_K_S

./build/bin/llama-quantize DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q5_K_S.gguf Q5_K_S

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q5_K_S.gguf -cnv

Q5_K_M

./build/bin/llama-quantize DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q5_K_M.gguf Q5_K_M

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q5_K_M.gguf -cnv
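The quantize/run pairs above differ only in the scheme name. They can therefore be generated rather than typed by hand. This is a minimal sketch in which the constant and function names are hypothetical. The paths assume the working directory is the llama.cpp root, as in the commands above.

```python
from typing import List

MODEL_DIR = "DeepSeek-R1-Distill-Qwen-1.5B"
BASE = f"{MODEL_DIR}/{MODEL_DIR}"  # prefix shared by all .gguf files
SCHEMES = ["Q2_K", "Q4_K_S", "Q4_K_M", "Q5_K_S", "Q5_K_M"]


def quantize_cmd(scheme: str) -> List[str]:
    """llama-quantize <F16 input> <quantized output> <type>, as in the commands above."""
    return ["./build/bin/llama-quantize",
            f"{BASE}-F16.gguf", f"{BASE}-{scheme}.gguf", scheme]


def cli_cmd(scheme: str) -> List[str]:
    """Interactive llama-cli session on the quantized file."""
    return ["./build/bin/llama-cli", "-m", f"{BASE}-{scheme}.gguf", "-cnv"]
```

For example, `for s in SCHEMES: subprocess.run(quantize_cmd(s), check=True)` (after `import subprocess`) would create all five quantized files in one pass.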

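Input prompts (the number after each prompt is its length, used as the x-axis value):

1. 您好 ("Hello"): 12
2. 你是谁? ("Who are you?"): 13
3. 请你简单介绍一下深度求索公司。 ("Please briefly introduce the company DeepSeek."): 18
4. 请你生成一段c语言代码,用于实现冒泡排序。 ("Please write a piece of C code that implements bubble sort."): 23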
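The loop over schemes and prompts can be sketched as follows. `time_one(scheme, prompt)` is a hypothetical callback that performs one generation and returns its duration in seconds, for example by combining a command builder with a wall-clock timer. Averaging over `repeats` runs is an added assumption intended to smooth out run-to-run noise on a phone CPU. It was not part of the original procedure. The lengths are the numbers recorded after each prompt above.

```python
from typing import Callable, Dict, List, Tuple

# (prompt, recorded length) pairs from the prompt list
PROMPTS: List[Tuple[str, int]] = [
    ("您好", 12),
    ("你是谁?", 13),
    ("请你简单介绍一下深度求索公司。", 18),
    ("请你生成一段c语言代码,用于实现冒泡排序。", 23),
]


def run_benchmark(
    schemes: List[str],
    prompts: List[Tuple[str, int]],
    time_one: Callable[[str, str], float],
    repeats: int = 3,
) -> Dict[str, List[Tuple[int, float]]]:
    """Return {scheme: [(prompt_length, mean_seconds), ...]}, one series per line."""
    results: Dict[str, List[Tuple[int, float]]] = {}
    for scheme in schemes:
        points: List[Tuple[int, float]] = []
        for prompt, length in prompts:
            # repeat each measurement and take the mean
            times = [time_one(scheme, prompt) for _ in range(repeats)]
            points.append((length, sum(times) / len(times)))
        results[scheme] = points
    return results
```

Passing the timing function in as a parameter keeps the loop independent of how each run is launched, for example through llama-cli or through the HTTP server.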

Data:

Not available yet.

References:

1. A beginner's tutorial: deploying the DeepSeek distilled model locally with llama.cpp (Zhihu)

3. Running LLMs on a phone: Ollama / llama.cpp / vLLM measured comparison (CSDN blog)

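Deployment process (mainly following the Zhihu tutorial):

1. Install AidLux on the phone.

2. Log in from the computer and carry out the following steps there.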

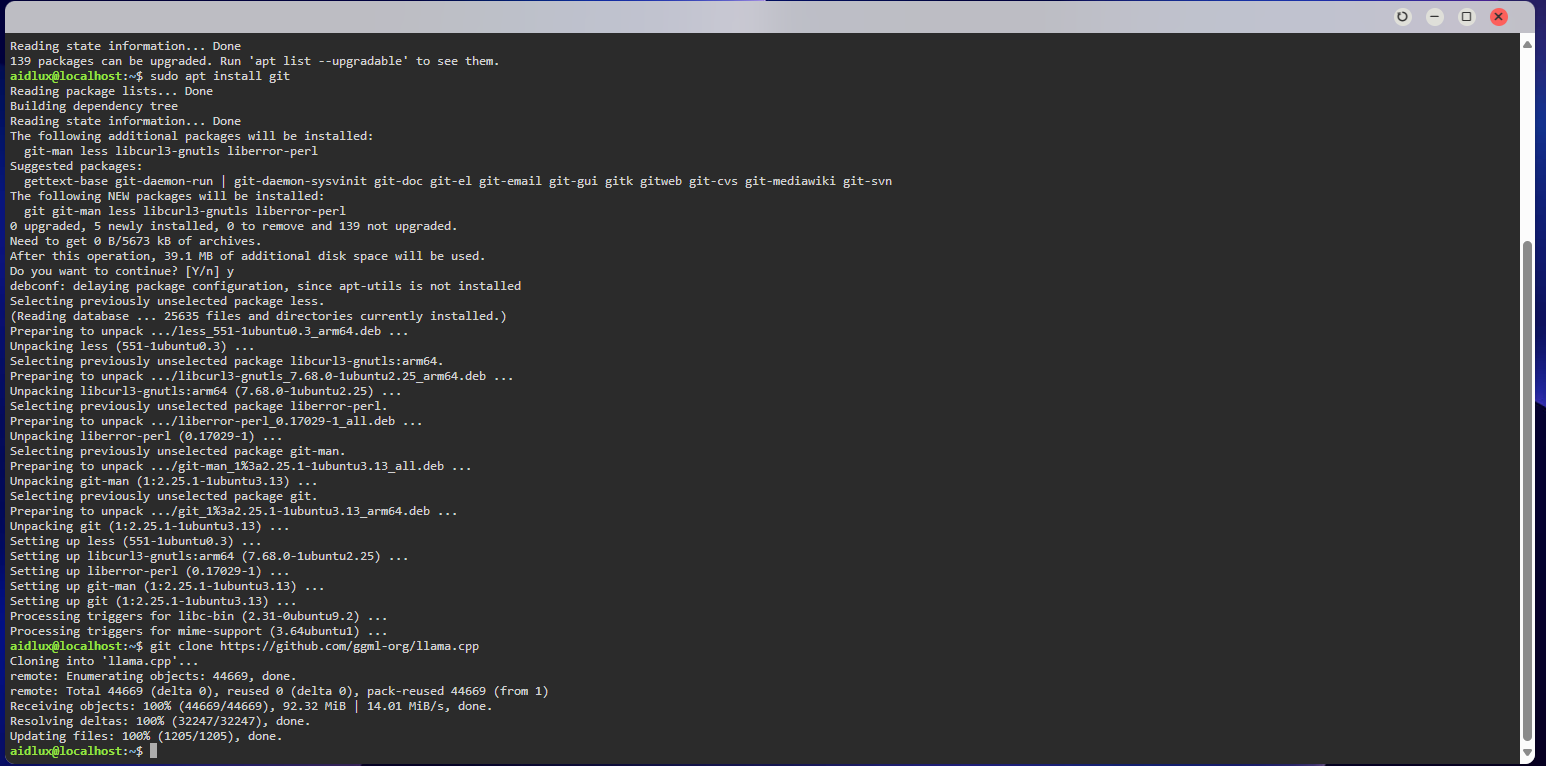

3. Install git and cmake.

4. Download the model (the original had em-dashes where the command needs double hyphens):

modelscope download --model deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --local_dir DeepSeek-R1-Distill-Qwen-1.5B

5. Upgrade pip:

python3.8 -m pip install --upgrade pip

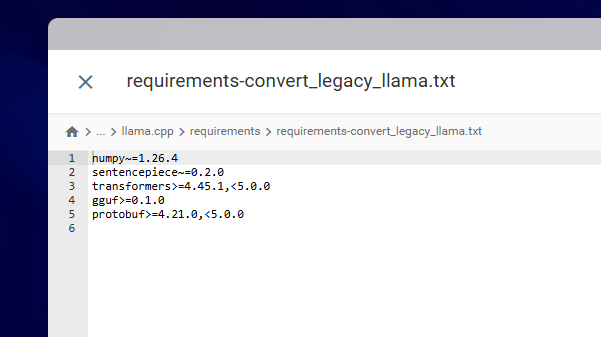

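6. Use numpy 1.24.4 instead of 1.26.4 (numpy 1.25 and later no longer support Python 3.8), then convert the Hugging Face model to GGUF: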

python3 convert_hf_to_gguf.py DeepSeek-R1-Distill-Qwen-1.5B/
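Before quantizing the converted file or copying it elsewhere, it can be worth checking that it is a valid GGUF. This minimal sketch reads only the fixed-size header and assumes the GGUF v2/v3 layout: a 4-byte magic `GGUF`, a uint32 version, then two uint64 counts, all little-endian (v1 used 32-bit counts). `read_gguf_header` is a hypothetical helper. The gguf-py package in the llama.cpp repository provides a full reader.

```python
import struct
from typing import Tuple


def read_gguf_header(path: str) -> Tuple[int, int, int]:
    """Return (version, tensor_count, metadata_kv_count) from a GGUF header."""
    with open(path, "rb") as f:
        header = f.read(24)  # 4 (magic) + 4 (version) + 8 + 8 (counts)
    if len(header) < 24 or header[:4] != b"GGUF":
        raise ValueError(f"{path} does not look like a GGUF file")
    # '<IQQ': little-endian uint32 version, uint64 tensor count, uint64 KV count
    version, n_tensors, n_kv = struct.unpack("<IQQ", header[4:24])
    return version, n_tensors, n_kv
```

For example, run `read_gguf_header("DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf")` after the conversion step. A `ValueError` means the conversion did not produce a usable file.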

7

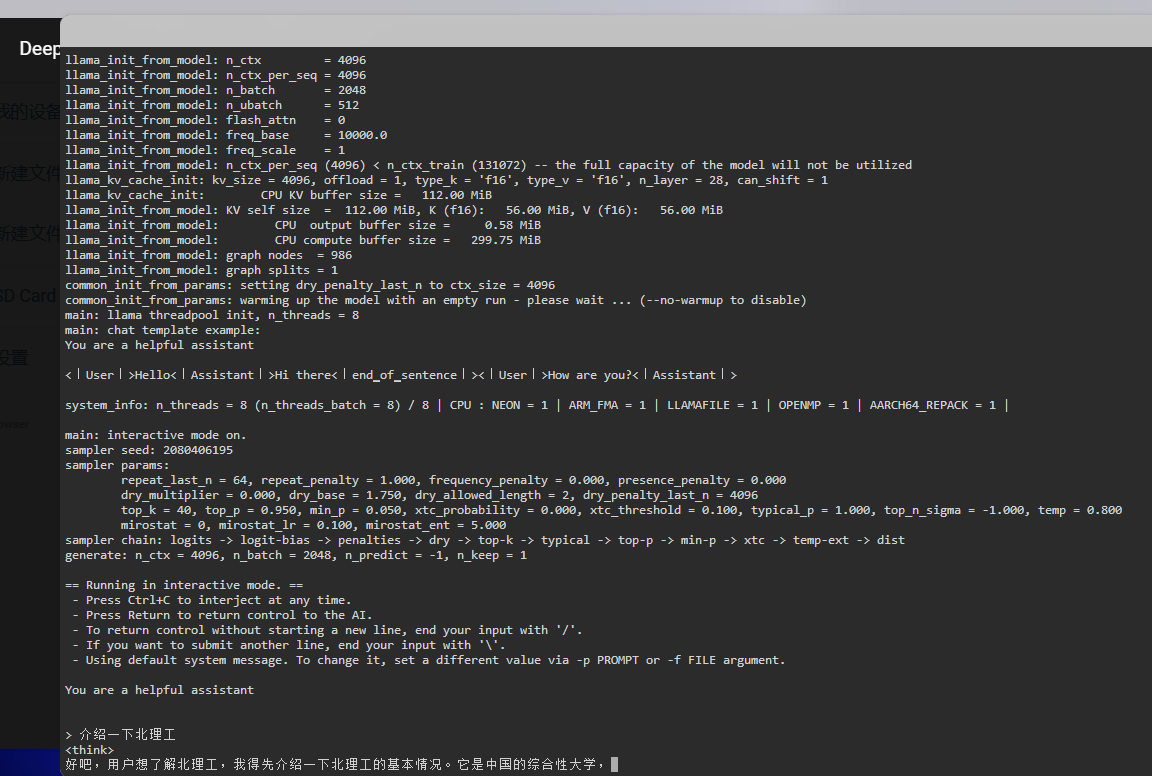

命令行运行

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf -cnv

8. The F16 model is too slow, so quantize it to Q4_K_M:

./build/bin/llama-quantize DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_M.gguf Q4_K_M

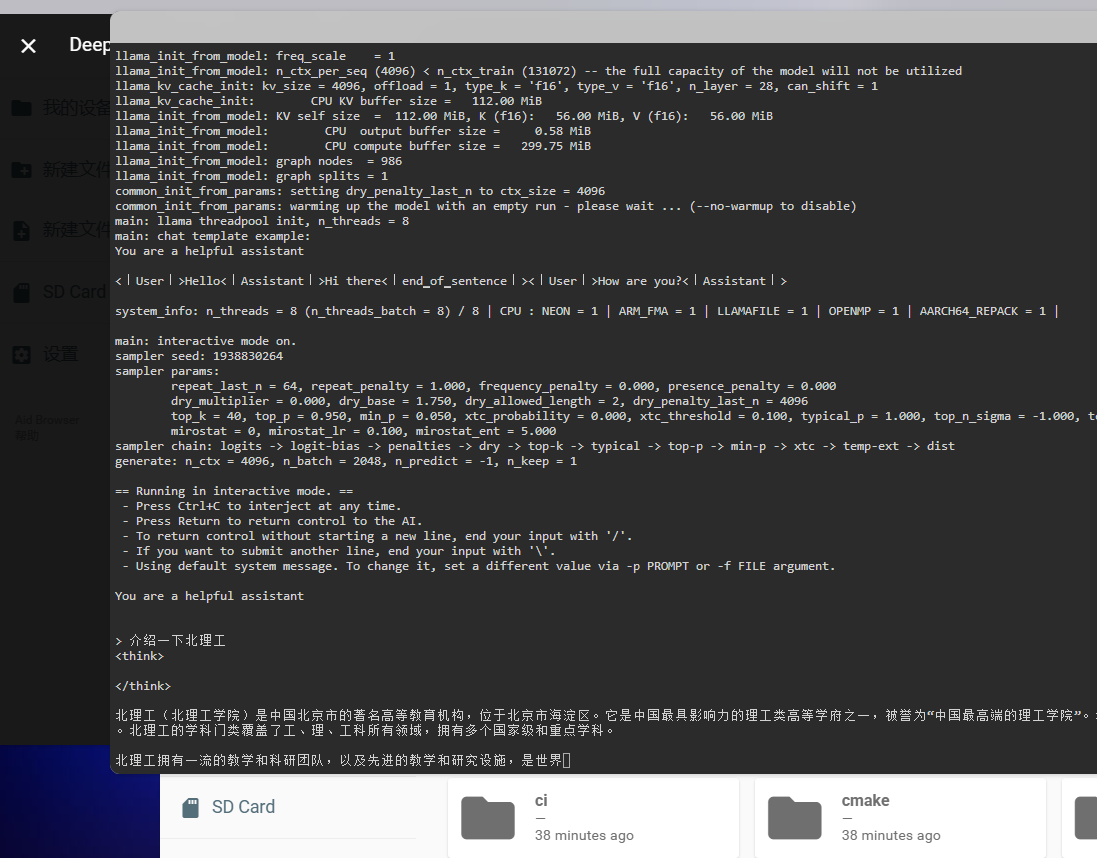
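Quantization speeds up CPU inference largely by reducing memory traffic. Generating each token reads essentially all of the weights, so fewer bytes per weight usually means faster decoding. A back-of-envelope size estimate is parameters × bits per weight / 8. In this sketch, 1.5e9 is the nominal parameter count from the model name; the true count is somewhat higher. 4.5 bits/weight is the nominal rate of llama.cpp's Q4_K block format. Q4_K_M keeps some tensors at higher precision, so the real file is somewhat larger than this estimate.

```python
def estimate_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1e9 bytes): params * bits / 8."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 1.5e9  # nominal, taken from the model name
f16 = estimate_size_gb(N_PARAMS, 16)   # F16: 16 bits per weight
q4k = estimate_size_gb(N_PARAMS, 4.5)  # nominal Q4_K rate
print(f"F16 ~ {f16:.2f} GB, Q4_K ~ {q4k:.2f} GB, ratio ~ {f16 / q4k:.1f}x")
# prints: F16 ~ 3.00 GB, Q4_K ~ 0.84 GB, ratio ~ 3.6x
```

The roughly 3.6× reduction in bytes per token is an upper bound on the decoding speed-up. Actual gains on the phone also depend on dequantization cost and thread count.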

9. Run the quantized model from the command line:

./build/bin/llama-cli -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_M.gguf -cnv

It runs successfully.

10. HTTP server mode

./build/bin/llama-server -m DeepSeek-R1-Distill-Qwen-1.5B/DeepSeek-R1-Distill-Qwen-1.5B-Q4_K_M.gguf --port 8088

(I can't reach it from my computer yet, possibly because of a firewall; I'm leaving that for now.)
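Once the server is up, it can be queried from Python using only the standard library. This minimal sketch assumes llama-server's OpenAI-compatible `/v1/chat/completions` endpoint; the helper names are hypothetical. A likely reason the server cannot be reached from the computer is that llama-server listens on 127.0.0.1 by default. Access from another machine therefore probably also needs `--host 0.0.0.0` on the server command, with the phone's LAN IP passed as `host` below, in addition to any firewall rules.

```python
import json
import urllib.request


def build_chat_request(prompt: str, host: str = "127.0.0.1", port: int = 8088,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST to llama-server's OpenAI-compatible chat endpoint."""
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"http://{host}:{port}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs), timeout=600) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

For example, `print(chat("你是谁?"))` inside AidLux on the phone talks to the local server. From the computer, the call would be `chat("你是谁?", host="<phone IP>")`.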